YouTube’s Latest Move

YouTube, the world's best-known hub for online video, is stepping up its transparency efforts, particularly around AI-generated content. The goal is to curb the spread of deepfakes and misinformation on the platform.

What’s Changing?

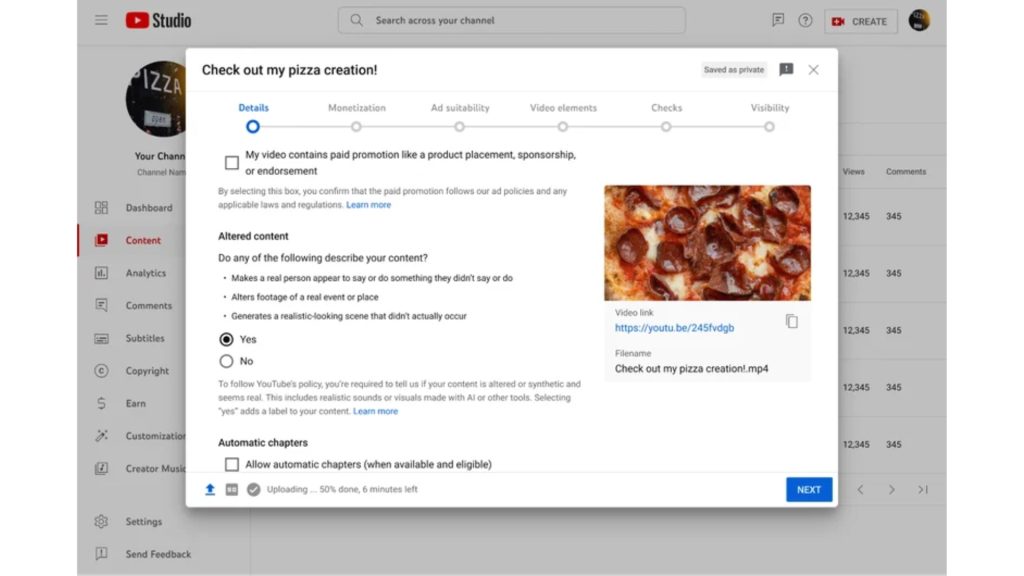

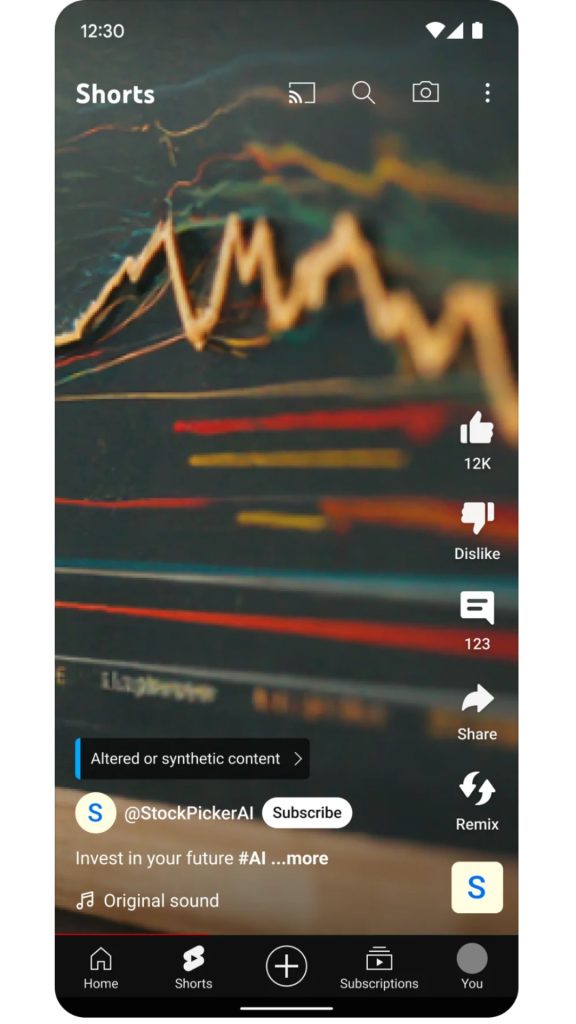

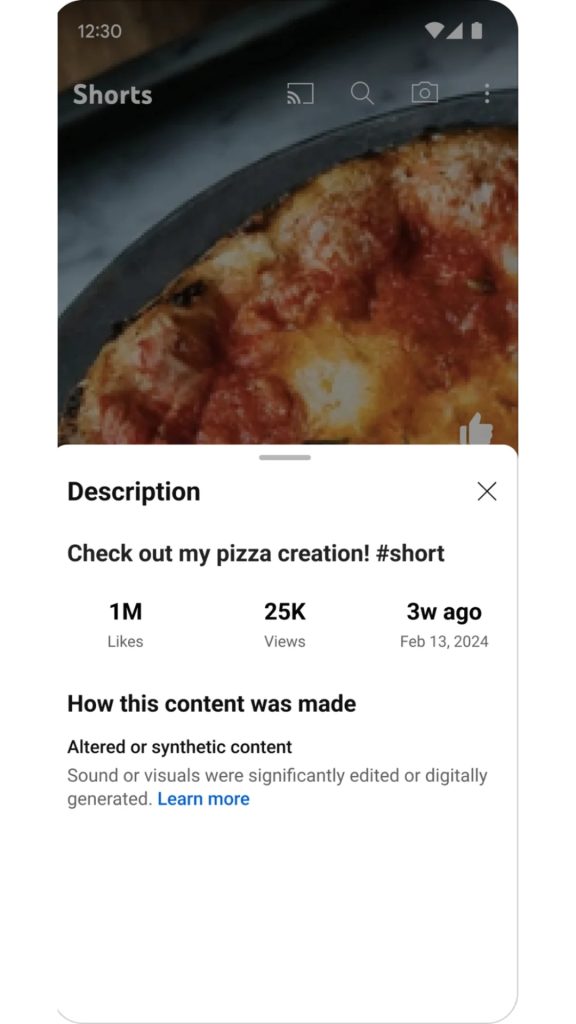

Creators who upload realistic-looking videos that were made or altered with AI must now disclose this to viewers. They do so by checking a box during the upload process that says, in effect, "This isn't real footage." YouTube then adds a label to the video so viewers know the content is altered or synthetic.

What Counts as AI-Generated?

YouTube's rules cover a range of content, such as videos that depict realistic-looking people who aren't real, or videos that use AI to alter real events or places. Not everything made with AI needs a label, however. Using AI to help write scripts or assist with production, for example, does not require disclosure.

Keeping an Eye on Things

If creators don't label AI-generated content that requires disclosure, YouTube may add the label itself. This keeps things clear for viewers and helps preserve the trust that YouTube depends on.

Why Does It Matter?

YouTube wants people to be able to trust what they see on the platform. With AI tools now in such wide use, it is easy for fabricated content to slip through. These rules are meant to curb that, especially in areas such as political videos, where misleading content can cause real harm.

Final Thoughts

YouTube's new AI transparency rules aim to make content on the platform safer and more trustworthy. But as AI keeps evolving, staying ahead of fake content will remain a major challenge for everyone.